Streamlit 学习笔记1

Streamlit 学习笔记1

文章目录

- Streamlit 学习笔记1

- 首先 安利下streamlit~🧐

- Answering Questions with HuggingFace Pipelines and Streamlit

- Build knowledge graphs with the Streamlit Agraph component

- 本文主要参考一些国外的博客(相当于一些搬运)

- Answering Questions with HuggingFace Pipelines and Streamlit - KDnuggets

- https://blog.streamlit.io/the-streamlit-agraph-component/

首先 安利下streamlit~🧐

我个人感觉streamlit真的非常棒,向大家安利一下

(看看AI能帮我写出怎样的安利文案👇)

-

prompt:Streamlit turns data scripts into shareable web apps in minutes. All in Python. All for free. No front‑end experience required. 请把我当成一个不会前后端的开发者,但是我想部署AI应用,向我推销streamlit

-

New Bing的回答:

Sure, I’d be happy to tell you more about Streamlit. Streamlit is a Python library that allows you to create interactive web applications from your data science and machine learning models. With Streamlit, you can create a web app in minutes without any front-end experience. You can use Streamlit to build a wide range of applications, including data visualizations, dashboards, and machine learning models.

Streamlit is a great tool for developers who want to deploy AI applications quickly and easily. With Streamlit, you can focus on building your models and leave the front-end development to the library. Streamlit is also free and open-source, so you can use it without any licensing fees.

I hope this helps! Let me know if you have any other questions.

-

chatgpt的回答:

当你需要部署一个AI应用时,你需要一个用户友好且易于部署的方式来展示和演示你的模型。Streamlit是一个开源的Python库,可以帮助你迅速将你的数据脚本转化为一个交互式的Web应用程序。

Streamlit提供了一些内置的组件,比如文本、图表、滑块和下拉菜单等,可以帮助你快速构建交互式界面。你可以使用它来展示数据、图形和机器学习模型的预测结果。此外,Streamlit 还支持 Jupyter Notebooks 和 Python 脚本的实时运行和部署。

使用Streamlit,你可以快速创建一个功能强大的Web应用程序,而无需任何前端经验。并且,由于它是免费的,并且支持Python,所以它是一个非常适合新手的工具。

总之,如果你想要快速地构建一个交互式的Web应用程序来展示你的AI应用,那么Streamlit是一个非常值得尝试的工具。

-

gpt-3.5-turbo (playground)

Streamlit是一个非常简单易用的Python库,可以帮助您快速将数据脚本转换为可共享的Web应用程序,您不需要任何前端或后端经验。使用Streamlit,您可以轻松构建交互式的AI应用,比如图像分类器、自然语言处理应用、数据可视化和预测模型等等。

Streamlit提供了许多内置的组件和工具,可以帮助您快速构建Web应用程序。例如,您可以使用Streamlit的数据框架组件将数据可视化,使用交互式滑块来调整模型参数,使用文本框来输入自定义文本等等。另外,Streamlit还提供了一些预训练的AI模型,可以帮助您快速构建高效的AI应用程序。

最重要的是,Streamlit是完全免费的。您不需要支付任何费用就可以使用它来构建自己的AI应用程序。因此,如果您想快速构建一个交互式的AI应用程序,Streamlit是一个非常不错的选择。

Answering Questions with HuggingFace Pipelines and Streamlit

-

练习一下虚拟环境的使用 (现在有了GitHub copilot cli 是真的方便多了!!!)

问问它:

github-copilot-cli what-the-shell how to create a conda environment with nlp pipelinesconda create -n streamlitLearning python=3.8 conda activate streamlitLearning conda install transformers conda install streamlit -

环境准备好了,此时命名一个文件为

transformerDemo.py -

代码如下

其中很多注释是由copilot帮我写的

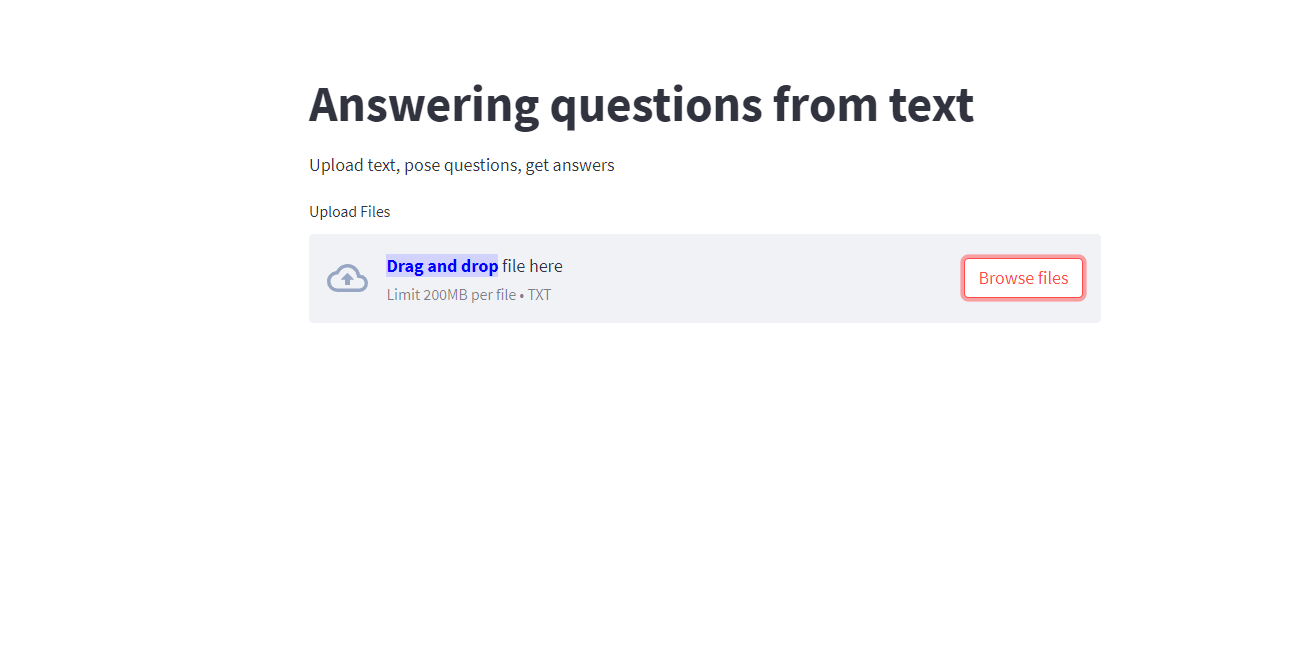

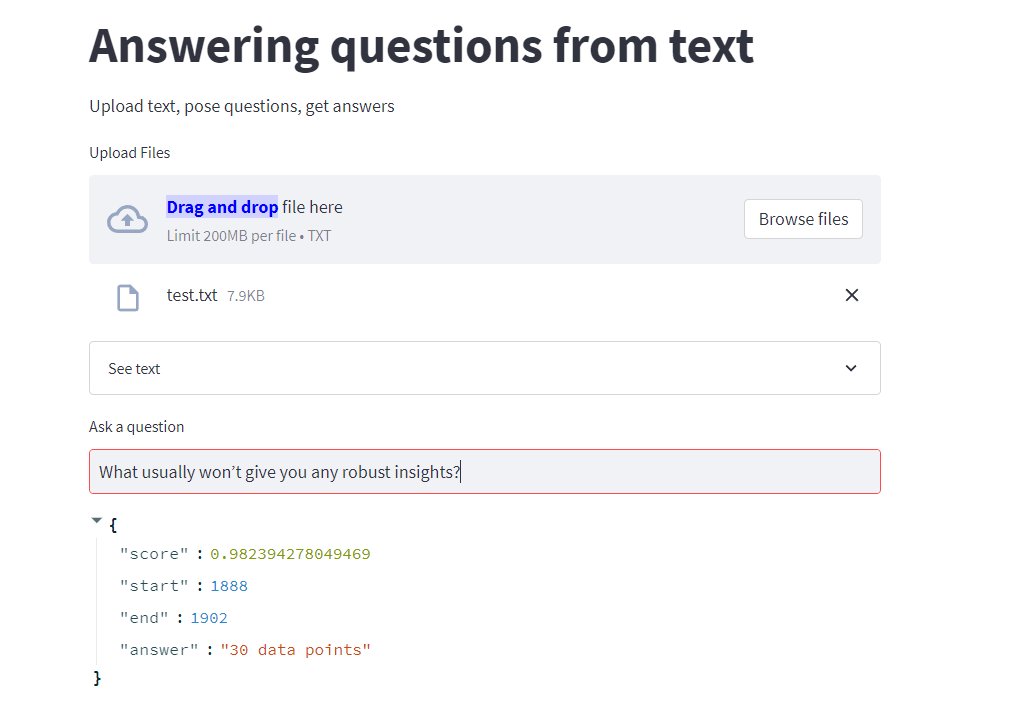

import streamlit as st # transformers是一个用于自然语言处理的python库,它提供了一系列的预训练模型,可以用于文本分类、文本生成、命名实体识别、问题回答、语义相似度、文本摘要、文本翻译、特征提取等任务。 # from transformers import pipeline 中的pipeline是一个高级API,可以用于快速使用预训练模型。 # pipeline具体的参数可以参考:https://huggingface.co/transformers/main_classes/pipelines.html from transformers import pipeline# load_file()函数用于加载文件,这里只加载txt文件,如果上传的文件不是txt文件,会提示“Unsupported file type”。 def load_file():"""Load text from file"""uploaded_file = st.file_uploader("Upload Files", type=['txt'])if uploaded_file is not None:if uploaded_file.type == "text/plain":raw_text = str(uploaded_file.read(), "utf-8")return raw_text# main()函数用于创建Streamlit应用的标题和描述,加载文件,显示文件内容,创建问题回答器,输入问题,获取答案。 if __name__ == "__main__":# App title and description# st.title()函数用于创建Streamlit应用的标题。st.title("Answering questions from text")# st.write()函数用于创建Streamlit应用的描述。st.write("Upload text, pose questions, get answers")# Load file# load_file会在页面上创建一个文件上传框,用户可以上传txt文件。raw_text = load_file()# 如果raw_text不为空,就显示文件内容。if raw_text != None and raw_text != '':# Display text# st.expander()函数用于创建一个可折叠的区域,用户可以点击标题来展开或折叠区域。with st.expander("See text"):st.write(raw_text)# Perform question answering# question_answerer = pipeline('question-answering')会创建一个问题回答器,用于回答问题。# pipeline('question-answering')的参数可以参考:https://huggingface.co/transformers/task_summary.html#question-answering# pipeline('question-answering')大概的流程是:输入问题和文本,模型会找到文本中最相关的句子,然后从这个句子中找到最相关的答案。question_answerer = pipeline('question-answering')answer = ''# st.text_input()函数用于创建一个文本输入框,用户可以在文本输入框中输入问题。# question 是文本输入框的标题,如果用户输入了问题,就会调用question_answerer()函数,获取答案。question = st.text_input('Ask a question')if question != '' and raw_text != '':answer = question_answerer({'question': question,'context': raw_text})st.write(answer) -

然后运行,只需要终端里面输入:

streamlit run transformerDemo.py-

遇到了报错

from streamlit.cli import main ModuleNotFoundError: No module named 'streamlit.cli'问了chatgpt,new bing,感觉回答不靠谱,去StackOverflow看的方案是 先 pip uninstall streamlit 然后再 pip install streamlit

-

但我连着梯子,之前pip设置过国内镜像,有梯子的时候用不了,然后我就用了

mamba uninstall然后再mamba install -

还是没用

-

然后又看到StackOverflow说是要安装 networkx,还是没啥效果

-

过了一会儿,玄学的事情发生了,它突然就好了,能用了…

-

-

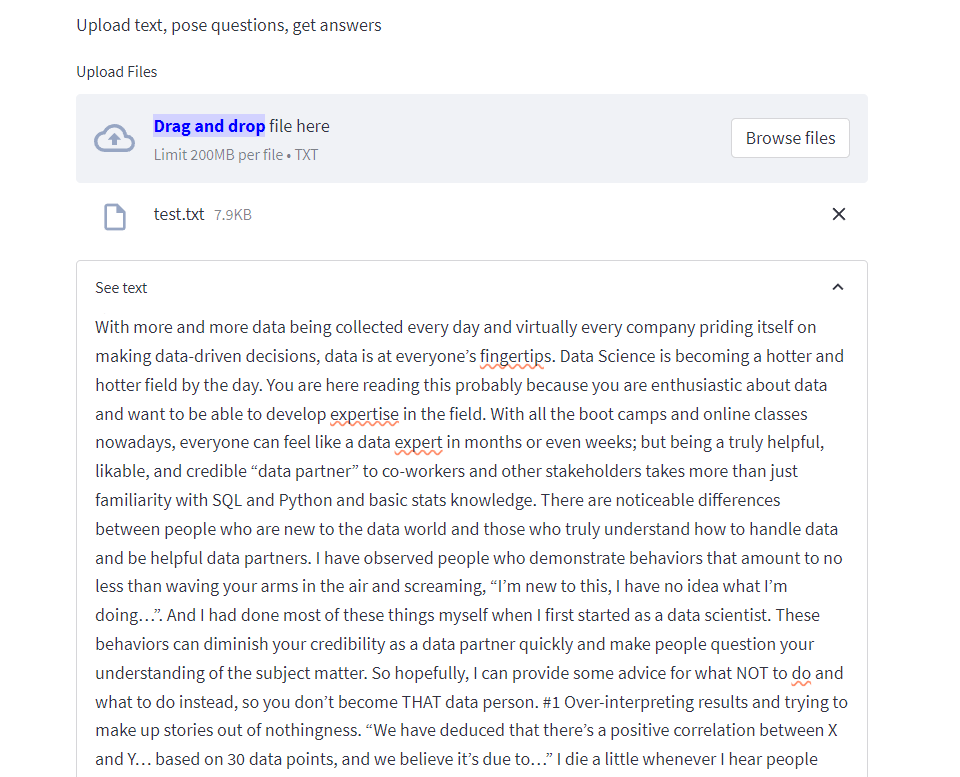

然后弄一个文本文件进去,文本内容来自:https://www.kdnuggets.com/2021/10/avoid-five-behaviors-data-novice.html

把里面的文本复制出来到

test.txtWith more and more data being collected every day and virtually every company priding itself on making data-driven decisions, data is at everyone’s fingertips. Data Science is becoming a hotter and hotter field by the day. You are here reading this probably because you are enthusiastic about data and want to be able to develop expertise in the field. With all the boot camps and online classes nowadays, everyone can feel like a data expert in months or even weeks; but being a truly helpful, likable, and credible “data partner” to co-workers and other stakeholders takes more than just familiarity with SQL and Python and basic stats knowledge. There are noticeable differences between people who are new to the data world and those who truly understand how to handle data and be helpful data partners. I have observed people who demonstrate behaviors that amount to no less than waving your arms in the air and screaming, “I’m new to this, I have no idea what I’m doing…”. And I had done most of these things myself when I first started as a data scientist. These behaviors can diminish your credibility as a data partner quickly and make people question your understanding of the subject matter. So hopefully, I can provide some advice for what NOT to do and what to do instead, so you don’t become THAT data person. #1 Over-interpreting results and trying to make up stories out of nothingness. “We have deduced that there’s a positive correlation between X and Y… based on 30 data points, and we believe it’s due to…” I die a little whenever I hear people make statements like the one above. When it comes to trend analysis and generating insights, the sample size is always the number one thing to consider. Unless it’s a focus group with people that are representative of your customer base (I even have doubts about survey results from focus groups, but that’s another topic), 30 data points usually won’t give you any robust insights. Is there anything more embarrassing than deducing “trends” from extremely small datasets? Yes, coming up with theories for why these “trends” are happening. I have seen people come up with all sorts of wild theories to explain why the results from tiny datasets are “counter-intuitive”; they lose their credibility along with most of the audience in the process when the real explanation is simple… it’s simply noise. Try this instead: Instead of jumping into trend analyses when the sample is small, focus on setting up structures to collect more data with better quality going forward to enable those analyses in the future. If you REALLY want to have some insights coming out of the small sample, caveat your finding with the lack of sample quantity and add a confidence interval to metrics that you report. #2 Not quality checking (QC) the data/query before using it. There are no perfect datasets out there; anyone who tells you otherwise is either lying or doesn’t know better. So as a data expert, you should know better than trusting data quality at face value. EVERY SINGLE PIECE of data you query and analyze needs to be quality checked — make sure tables are ACTUALLY deduped as they should be, check that timestamps are in the timezone you THINK they are, and so on. Not performing QC on the data before using it can cause unintended results and misleading insights and make people doubt your ability to deal with complicated data. Try this instead: Develop a QC framework (i.e., a list of tests you perform) and go through it every time you work with a new dataset. For example, check for (unexpected) duplicates; if you expect the data set in question to have one row per customer order, write a quick query to group by order id and count the number of rows — you will be surprised how many “order-level” tables have 1,000 records for some order ids. Always, always, always sanity-check your work, and double sanity-check with your stakeholders and subject matter experts. #3. Over-engineering things. I still remember the excitement after I learned about fancy models like the Random Forest or XGBoost; when you have a hammer, especially a shiny cool one, everything looks like a nail. But in reality, unless you are an ML engineer, you rarely need 10-layer neural networks in your day-to-day data work. Using fancy ML models when a simple linear regression suffices is not only inefficient but also counter-productive. As I mentioned in my article about data science lessons I learned from working at McKinsey, making a business impact is the number one goal when it comes to working as a data scientist in the industry, not showing off how much ML knowledge you have. Over-engineering models and analyses is a surefire way to make yourself the unpopular and ineffective data partner people want to avoid working with. Try this instead: Start simple, and only apply more complex methods if it’s truly necessary. Make very conscious decisions about the methodology you use in analyses, and apply the 80/20 rule to avoid unnecessary efforts that only bring marginal benefits, if at all. #4. Buzz word dropping. This one is very common among people who have just entered the data world. Similar to having the tendency to over-engineer things out of excitement about the new modeling skills, a lot of new data practitioners like to use all the new concepts and new words they learned whenever possible. When communicating, we tend to make up for our lack of understanding of things with complexity — the more buzz words a person uses when talking about ML and analytics, the less analytics he/she usually knows. A seasoned data practitioner should be able to explain the methodology and analytical details in plain English; if someone’s explanation of data work is as hard to understand as reading a Wikipedia page, it’s probably because they just read about it on Wikipedia too. Try this instead: When learning about a new analytical concept, really try to understand it to the point that you can easily explain it to your friends who are not data scientists… in plain English. This level of understanding will also help you decide when to apply the fancy but complicated approach and when to use the good old-fashioned linear regression. #5. Ignoring stakeholders’ needs when creating data products. Occasionally I meet new data practitioners who don’t only suffer from symptoms 3 and 4 above but carry their overzealousness so far as to create data pet projects at work that nobody appreciates but themselves. Don’t get me wrong, I think all enthusiasm in data should be encouraged, and pet projects are fun and helpful in developing skills… just not at your day job, where a business is counting on you to use data products to drive impact. Data products (e.g., dashboards) are just like any other product, the number one design rule for them should be user-centricity. They should be born out of needs… not just passion. Try this instead: Talk to your stakeholders before building any data product. Understand the business’ needs at the current stage: If it’s a startup, I bet your stakeholder won’t care too much about the format and color of the data visualizations you build but wants to instead focus on the accuracy of the data behind the visualizations and insights from them. Similarly, truly understand the audience and use case; for example, you would spend more time on a polished and simple user interface if the data product is intended to be used regularly by non-technical audiences. Develop your pet projects on the side, and maybe it will come in handy someday; just don’t let them get in the way of you being an effective and likable data partner. Key takeaways Don’t over-complicate or over-engineer things; it will NOT make you look smart, but will make you look like you don’t know what’s the most effective way of doing things. Make sure to QC your data and sanity-check your insights, and always caveat findings when data quality or the sample size is a concern. Have your stakeholders in mind when creating data products. -

然后传上去

-

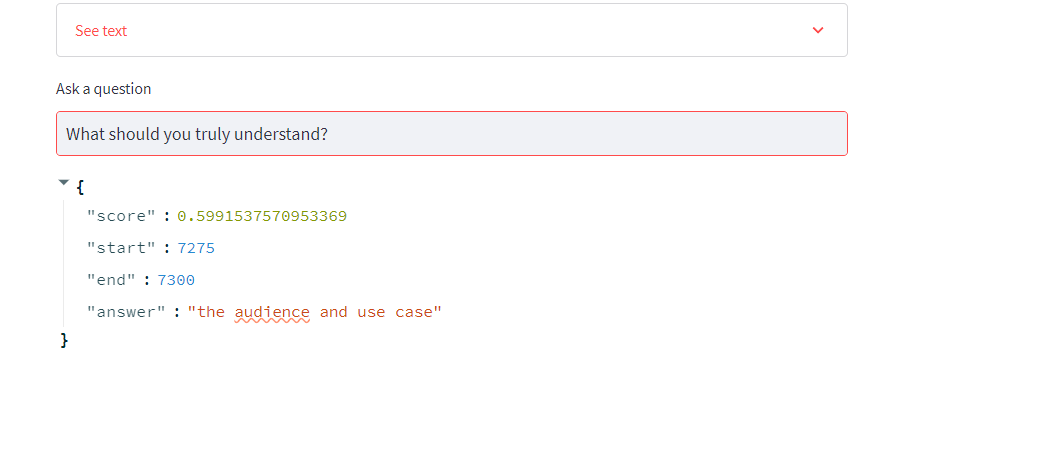

开始问问题

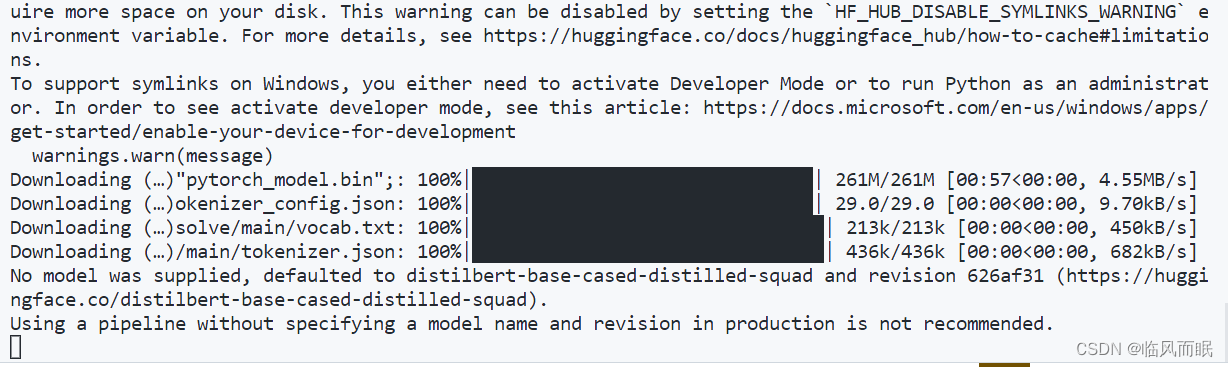

后台有一些warning

-

再多测试一些

Build knowledge graphs with the Streamlit Agraph component

参考:

- https://blog.streamlit.io/the-streamlit-agraph-component/

- https://github.com/ChrisDelClea/streamlit-agraph?ref=streamlit

-

关于pip和conda查看与设置镜像源:

https://www.cnblogs.com/pursuiting/p/14255231.html

https://blog.51cto.com/u_15077548/4372923

-

emmm 开着梯子,哪怕是断开连接, 我这里pip都会报错…

- 得把梯子的进程退出之后才能正常安装

-

有些东西pip能安装, conda和mamba安装不了(这和channel有关)

-

安装所需包

pip install Streamlit pip install streamlit-agraph pip install SPARQLWrapper -

用GitHub首页的代码加上copilot写了点注释,如下

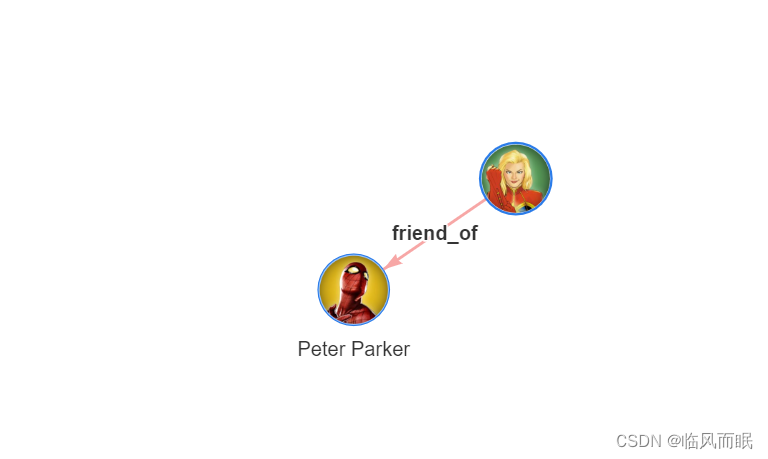

import streamlit # streamlit_agraph是一个用于在Streamlit中绘制图的python库,它可以绘制有向图、无向图、树、层次图、力导向图等。 # Config类用于配置图的样式,包括宽度、高度、是否有向、是否有物理引擎、是否有层次结构等。 # agraph()函数用于绘制图,它的参数包括节点、边、配置等。 # Node类用于创建节点,它的参数包括id、label、大小、形状、图片等。 # Edge类用于创建边,它的参数包括源、目标、标签等。 from streamlit_agraph import agraph, Node, Edge, Confignodes = [] edges = [] # 此处Node对象的id是指节点的id,label是指节点的标签,size是指节点的大小,shape是指节点的形状,image是指节点的图片。 nodes.append(Node(id="Spiderman",label="Peter Parker",size=25,shape="circularImage",image="http://marvel-force-chart.surge.sh/marvel_force_chart_img/top_spiderman.png")) # includes **kwargsnodes.append(Node(id="Captain_Marvel",size=25,shape="circularImage",image="http://marvel-force-chart.surge.sh/marvel_force_chart_img/top_captainmarvel.png")) # 此处Edge对象的source是指边的源,label是指边的标签,target是指边的目标。 edges.append(Edge(source="Captain_Marvel",label="friend_of",target="Spiderman",# **kwargs)) # 这里的Config类的参数包括宽度、高度、是否有向、是否有物理引擎、是否有层次结构等。 # python中的**kwargs是用来收集不定数量的关键字参数,它会将不定数量的关键字参数转换成字典。config = Config(width=750,height=950,directed=True,physics=True,hierarchical=False,# **kwargs)# return_value 返回的是agraph对象,它的参数包括节点、边、配置等。 # agraph在页面上显示 return_value = agraph(nodes=nodes,edges=edges,config=config) -

然后再使用streamlit run 跑起来

import streamlit # streamlit_agraph是一个用于在Streamlit中绘制图的python库,它可以绘制有向图、无向图、树、层次图、力导向图等。 # Config类用于配置图的样式,包括宽度、高度、是否有向、是否有物理引擎、是否有层次结构等。 # agraph()函数用于绘制图,它的参数包括节点、边、配置等。 # Node类用于创建节点,它的参数包括id、label、大小、形状、图片等。 # Edge类用于创建边,它的参数包括源、目标、标签等。 from streamlit_agraph import agraph, Node, Edge, Confignodes = [] edges = [] # 此处Node对象的id是指节点的id,label是指节点的标签,size是指节点的大小,shape是指节点的形状,image是指节点的图片。 nodes.append(Node(id="Spiderman",label="Peter Parker",size=25,shape="circularImage",image="http://marvel-force-chart.surge.sh/marvel_force_chart_img/top_spiderman.png")) # includes **kwargsnodes.append(Node(id="Captain_Marvel",size=25,shape="circularImage",image="http://marvel-force-chart.surge.sh/marvel_force_chart_img/top_captainmarvel.png")) # 此处Edge对象的source是指边的源,label是指边的标签,target是指边的目标。 edges.append(Edge(source="Captain_Marvel",label="friend_of",target="Spiderman",# **kwargs)) # 这里的Config类的参数包括宽度、高度、是否有向、是否有物理引擎、是否有层次结构等。 # python中的**kwargs是用来收集不定数量的关键字参数,它会将不定数量的关键字参数转换成字典。config = Config(width=750,height=950,directed=True,physics=True,hierarchical=False,# **kwargs)# return_value 返回的是agraph对象,它的参数包括节点、边、配置等。 # agraph在页面上显示 return_value = agraph(nodes=nodes,edges=edges,config=config)