Eating YOLOv8 One Bite at a Time (1)

Founder

2025-05-31 05:24:24


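1. Goal

The previous post covered how to train YOLOv8:

Training YOLOv8

But if we stop there, we really are nothing more than hyperparameter tuners...

So, to understand the original authors' ideas more deeply, and to improve both our coding skills and our ability to modify deep learning models, I think it is worth breaking the code into pieces that are easier to digest.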

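1. Forward network

When we say "network" we usually mean the forward network. How the network is trained in the backward pass is rarely discussed, because the framework generally handles it automatically. Training, however, involves more than the network: it also needs the data and the loss. So we split YOLOv8 into three parts, and the first part is the forward network. The forward network can itself be divided into three parts: backbone, neck, and head.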

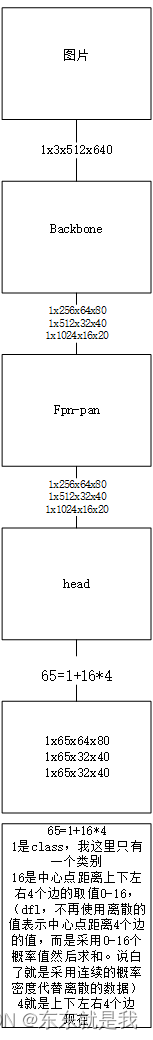

Assume the input is a 512*640 (height * width) image and the dataset has only one class.
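To make this running example concrete, the sketch below works out the feature-map sizes and the head output width by plain arithmetic. It assumes the three detection scales use strides 8, 16 and 32 and that the head uses reg_max = 16, which is what the code later in this post sets; the function names are my own and purely illustrative.

```python
def feature_shapes(height, width, strides=(8, 16, 32)):
    """Spatial size of each feature map, assuming the input divides evenly by every stride."""
    return [(height // s, width // s) for s in strides]


def head_channels(num_classes, reg_max=16):
    """Channels per head output: class scores plus 4 box sides times reg_max bins."""
    return num_classes + 4 * reg_max


shapes = feature_shapes(512, 640)
print(shapes)            # [(64, 80), (32, 40), (16, 20)]
print(head_channels(1))  # 65
```

These are the shapes the test snippets in the following sections should reproduce.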

0. basemodules

These are some of the modules YOLOv8 uses. We can add our own modules here later.
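The module file imports `autopad` from `utils.module_utils`, which this post does not show. A minimal sketch of what such a helper usually looks like, modeled on the function of the same name in Ultralytics, is given here. Treat it as an assumption about the author's utility rather than their actual code. It picks the padding that keeps the output the same size as the input at stride 1.

```python
def autopad(k, p=None, d=1):
    """Return 'same' padding for kernel size k (int or sequence) and dilation d."""
    if d > 1:
        # Effective kernel size once dilation is applied
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]
    if p is None:
        # Half the (effective) kernel size, per dimension
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]
    return p


print(autopad(3))       # 1
print(autopad(1))       # 0
print(autopad((1, 3)))  # [0, 1]
print(autopad(3, d=2))  # 2
```

With this padding, a 3x3 convolution at stride 2 halves an even-sized feature map exactly, which is what the backbone stages rely on.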

# encoding=utf-8

import math

import torch
import torch.nn as nn

from utils.module_utils import autopad


class CBA(nn.Module):
    """Conv2d + BatchNorm2d + activation (SiLU by default)."""

    default_act = nn.SiLU()

    def __init__(self, input_channel, output_channel, k=1, s=1, p=None, g=1, d=1, act=True):
        super().__init__()
        self.conv = nn.Conv2d(input_channel, output_channel, k, s, autopad(k, p, d),
                              groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(output_channel)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))


class DWCBA(CBA):
    """Depthwise CBA: groups = gcd(input_channel, output_channel)."""

    def __init__(self, input_channel, output_channel, k=1, s=1, d=1, act=True):
        super().__init__(input_channel, output_channel, k, s,
                         g=math.gcd(input_channel, output_channel), d=d, act=act)


class Bottleneck(nn.Module):
    """Two stacked CBA layers with an optional residual connection."""

    def __init__(self, input_channel, output_channel, shortcut=True, g=1, k=(3, 3), e=0.5):
        super().__init__()
        c_ = int(output_channel * e)  # hidden channels
        self.cv1 = CBA(input_channel, c_, k[0], 1)
        self.cv2 = CBA(c_, output_channel, k[1], 1, g=g)
        self.add = shortcut and input_channel == output_channel

    def forward(self, x):
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class BottleneckCSP(nn.Module):
    def __init__(self, input_channel, output_channel, n=1, shortcut=True, g=1, e=0.5):
        super(BottleneckCSP, self).__init__()
        c_ = int(output_channel * e)
        self.cv1 = CBA(input_channel, c_, 1, 1)
        self.cv2 = nn.Conv2d(input_channel, c_, 1, 1, bias=False)
        self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)
        self.cv4 = CBA(2 * c_, output_channel, 1, 1)
        self.bn = nn.BatchNorm2d(2 * c_)
        self.act = nn.SiLU()
        self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))

    def forward(self, x):
        y1 = self.cv3(self.m(self.cv1(x)))
        y2 = self.cv2(x)
        # Fixed: torch.cat takes a tuple of tensors, and dim=1 belongs to cat, not to bn
        return self.cv4(self.act(self.bn(torch.cat((y1, y2), 1))))


class C3(nn.Module):
    def __init__(self, input_channel, output_channel, n=1, shortcut=True, g=1, e=0.5):
        super(C3, self).__init__()
        c_ = int(output_channel * e)
        self.cv1 = CBA(input_channel, c_, 1, 1)
        self.cv2 = CBA(input_channel, c_, 1, 1)
        self.cv3 = CBA(2 * c_, output_channel, 1)
        self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, k=((1, 1), (3, 3)), e=1.0) for _ in range(n)))

    def forward(self, x):
        # Fixed: the two branches must be passed to torch.cat as a tuple
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))


class C2(nn.Module):
    def __init__(self, input_channel, output_channel, n=1, shortcut=True, g=1, e=0.5):
        super(C2, self).__init__()
        self.c = int(output_channel * e)
        self.cv1 = CBA(input_channel, 2 * self.c, 1, 1)
        self.cv2 = CBA(2 * self.c, output_channel, 1)
        self.m = nn.Sequential(*(Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n)))

    def forward(self, x):
        a, b = self.cv1(x).split((self.c, self.c), 1)
        return self.cv2(torch.cat((self.m(a), b), 1))


class C2f(nn.Module):
    """Like C2, but the output of every Bottleneck is kept and concatenated."""

    def __init__(self, input_channel, output_channel, n=1, shortcut=True, g=1, e=0.5):
        super(C2f, self).__init__()
        self.c = int(output_channel * e)
        self.cv1 = CBA(input_channel, 2 * self.c, 1, 1)
        self.cv2 = CBA((2 + n) * self.c, output_channel, 1)
        self.m = nn.ModuleList(Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n))

    def forward(self, x):
        y = list(self.cv1(x).split((self.c, self.c), 1))
        y.extend(m(y[-1]) for m in self.m)
        return self.cv2(torch.cat(y, 1))


class C1(nn.Module):
    def __init__(self, input_channel, output_channel, n=1):
        super(C1, self).__init__()
        self.cv1 = CBA(input_channel, output_channel, 1, 1)
        self.m = nn.Sequential(*(CBA(output_channel, output_channel, 3) for _ in range(n)))

    def forward(self, x):
        y = self.cv1(x)
        return self.m(y) + y


class C3x(C3):
    def __init__(self, input_channel, output_channel, n=1, shortcut=True, g=1, e=0.5):
        super(C3x, self).__init__(input_channel, output_channel, n, shortcut, g, e)
        self.c_ = int(output_channel * e)
        self.m = nn.Sequential(*(Bottleneck(self.c_, self.c_, shortcut, g, k=((1, 3), (3, 1)), e=1) for _ in range(n)))


class C3Ghost(C3):
    def __init__(self, input_channel, output_channel, n=1, shortcut=True, g=1, e=0.5):
        super(C3Ghost, self).__init__(input_channel, output_channel, n, shortcut, g, e)
        c_ = int(output_channel * e)
        self.m = nn.Sequential(*(GhostBottleneck(c_, c_) for _ in range(n)))


class GhostCBA(nn.Module):
    def __init__(self, input_channel, output_channel, k=1, s=1, g=1, act=True):
        super(GhostCBA, self).__init__()
        c_ = output_channel // 2
        # Fixed: act must be passed by keyword, otherwise it lands in the dilation slot of CBA
        self.cv1 = CBA(input_channel, c_, k, s, None, g, act=act)
        self.cv2 = CBA(c_, c_, 5, 1, None, c_, act=act)

    def forward(self, x):
        y = self.cv1(x)
        return torch.cat((y, self.cv2(y)), 1)


class GhostBottleneck(nn.Module):
    def __init__(self, input_channel, output_channel, k=3, s=1):
        super(GhostBottleneck, self).__init__()
        c_ = output_channel // 2
        self.conv = nn.Sequential(
            GhostCBA(input_channel, c_, 1, 1),
            DWCBA(c_, c_, k, s, act=False) if s == 2 else nn.Identity(),
            GhostCBA(c_, output_channel, 1, 1, act=False))
        self.shortcut = nn.Sequential(
            DWCBA(input_channel, input_channel, k, s, act=False),
            CBA(input_channel, output_channel, 1, 1, act=False)) if s == 2 else nn.Identity()

    def forward(self, x):
        return self.conv(x) + self.shortcut(x)

1.1 backbone

The code is adapted from PaddleYOLO.

# encoding=utf-8

import torch
import torch.nn as nn

from backbone.basemodules import CBA, C2f
from enhance.other import SPPCSPC


class YOLOv8CSPDarkNet(nn.Module):
    def __init__(self, return_idx=(2, 3, 4)):
        super(YOLOv8CSPDarkNet, self).__init__()
        self.return_idx = return_idx
        # Each row: input channels, output channels, number of C2f blocks, shortcut, use SPP block
        arch_setting = [[64, 128, 3, True, False], [128, 256, 6, True, False],
                        [256, 512, 6, True, False], [512, 1024, 3, True, True]]
        base_channels = arch_setting[0][0]
        self.stem = CBA(3, base_channels, k=3, s=2)
        _output_channels = [base_channels]
        # Fixed: use nn.ModuleList instead of a plain list so the stage parameters
        # are registered (visible to .parameters(), .to(device), state_dict, ...)
        self.csp_dark_blocks = nn.ModuleList()
        for input_channel, output_channel, num_blocks, shortcut, use_sppf in arch_setting:
            _output_channels.append(output_channel)
            stage = []
            conv_layer = CBA(input_channel, output_channel, 3, 2)  # stride-2 downsampling
            c2f_layer = C2f(output_channel, output_channel, num_blocks, shortcut)
            stage.append(conv_layer)
            stage.append(c2f_layer)
            if use_sppf:
                sppf_layer = SPPCSPC(output_channel, output_channel)
                stage.append(sppf_layer)
            self.csp_dark_blocks.append(nn.Sequential(*stage))
        self._output_channels = [_output_channels[i] for i in self.return_idx]
        self.strides = [[2, 4, 8, 16, 32, 64][i] for i in self.return_idx]

    def forward(self, x):
        outputs = []
        x = self.stem(x)
        for i, layer in enumerate(self.csp_dark_blocks):
            x = layer(x)
            if i + 1 in self.return_idx:
                outputs.append(x)
        return outputs


# Quick shape check
x = torch.randn(1, 3, 512, 640)
model = YOLOv8CSPDarkNet()
output = model(x)
print(output[0].shape)

1.2 pafpn

The neck module is essentially a set of feature-fusion layers.
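The neck code relies on a `Concat` helper from `utils.module_utils` that this post does not show. The sketch below is a guess at its behavior based on how it is called (`Concat(1)` applied to a tuple of tensors): a thin `nn.Module` wrapper around `torch.cat`. Concatenating along the channel dimension is the fusion step the neck repeats four times.

```python
import torch
import torch.nn as nn


class Concat(nn.Module):
    """Concatenate a list or tuple of tensors along a fixed dimension (assumed helper)."""

    def __init__(self, dimension=1):
        super().__init__()
        self.d = dimension

    def forward(self, x):
        return torch.cat(x, self.d)


# Fusing an upsampled 1024-channel map with a 512-channel map of the same spatial size
a = torch.randn(1, 1024, 32, 40)
b = torch.randn(1, 512, 32, 40)
fused = Concat(1)((a, b))
print(fused.shape)  # torch.Size([1, 1536, 32, 40])
```

The channel counts simply add up, which is why each C2f block in the neck takes the sum of two channel counts as its input width.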

# encoding=utf-8

import torch
import torch.nn as nn

from backbone.basemodules import C2f, CBA
from utils.module_utils import Concat


class YOLOV8C2FPAN(nn.Module):
    def __init__(self, n=3, input_channels=(256, 512, 1024)):  # n: number of bottlenecks per C2f
        super(YOLOV8C2FPAN, self).__init__()
        self.input_channels = input_channels
        self._output_channels = input_channels
        self.concat = Concat(1)
        self.upsample = nn.Upsample(scale_factor=2, mode='nearest')
        # fpn (top-down path)
        self.fpn_p4 = C2f(int(input_channels[2] + input_channels[1]), input_channels[1], n)
        self.fpn_p3 = C2f(int(input_channels[1] + input_channels[0]), input_channels[0], n)
        # pan (bottom-up path)
        self.down_conv2 = CBA(input_channels[0], input_channels[0], k=3, s=2)
        self.pan_n3 = C2f(int(input_channels[0] + input_channels[1]), input_channels[1], n)
        self.down_conv1 = CBA(input_channels[1], input_channels[1], k=3, s=2)
        self.pan_n4 = C2f(int(input_channels[1] + input_channels[2]), input_channels[2], n)

    def forward(self, x):
        c3, c4, c5 = x
        # fpn: upsample the deeper map and fuse it with the shallower one
        up_x1 = self.upsample(c5)
        f_concat1 = self.concat((up_x1, c4))
        f_out1 = self.fpn_p4(f_concat1)
        up_x2 = self.upsample(f_out1)
        f_concat2 = self.concat((up_x2, c3))
        f_out0 = self.fpn_p3(f_concat2)
        # pan: downsample the shallower map and fuse it with the deeper one
        down_x1 = self.down_conv2(f_out0)
        p_concat1 = self.concat((down_x1, f_out1))
        pan_out1 = self.pan_n3(p_concat1)
        down_x2 = self.down_conv1(pan_out1)
        p_concat2 = self.concat((down_x2, c5))
        pan_out0 = self.pan_n4(p_concat2)
        return [f_out0, pan_out1, pan_out0]


# Quick shape check with backbone-sized inputs for a 512*640 image
c3 = torch.randn(1, 256, 64, 80)
c4 = torch.randn(1, 512, 32, 40)
c5 = torch.randn(1, 1024, 16, 20)
inputs = [c3, c4, c5]
m = YOLOV8C2FPAN()
output = m(inputs)
print(output[0].shape)
print(output[1].shape)
print(output[2].shape)
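For reference, the shapes this test should print can be derived by hand. The sketch traces (channels, height, width) through the same FPN and PAN steps, under the assumption that C2f only changes the channel count, upsampling doubles the spatial size, and the stride-2 CBA halves it. All helper names here are illustrative and not part of the author's code.

```python
def cat(a, b):
    """Channel concat of two (C, H, W) shapes with equal spatial size."""
    assert a[1:] == b[1:], "spatial sizes must match before concatenation"
    return (a[0] + b[0], a[1], a[2])


def up(s):
    """Nearest-neighbor upsampling by 2."""
    return (s[0], s[1] * 2, s[2] * 2)


def down(s):
    """3x3 stride-2 convolution with 'same'-style padding."""
    return (s[0], s[1] // 2, s[2] // 2)


def c2f(s, out_channels):
    """C2f keeps the spatial size and sets the channel count."""
    return (out_channels, s[1], s[2])


def trace_pafpn(c3, c4, c5):
    f_out1 = c2f(cat(up(c5), c4), c4[0])              # top-down, stride 16
    f_out0 = c2f(cat(up(f_out1), c3), c3[0])          # top-down, stride 8
    pan_out1 = c2f(cat(down(f_out0), f_out1), c4[0])  # bottom-up, stride 16
    pan_out0 = c2f(cat(down(pan_out1), c5), c5[0])    # bottom-up, stride 32
    return [f_out0, pan_out1, pan_out0]


print(trace_pafpn((256, 64, 80), (512, 32, 40), (1024, 16, 20)))
# [(256, 64, 80), (512, 32, 40), (1024, 16, 20)]
```

The neck therefore returns maps with the same channels and sizes as its inputs; what changes is that every scale now carries information from the other two.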

1.3 head

This is YOLOv8's detection head. Only the training path is written here; the inference path will be added next time.
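The regression branch of the head outputs 4 * reg_max channels because, in YOLOv8's distribution-based box regression, each of the four box sides is predicted as a distribution over reg_max bins rather than as a single number. As a rough sketch of how one side is turned back into a distance (this is the usual expectation-over-bins step written in plain Python for illustration, not the author's inference code, which is deferred to the next post):

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_distance(logits):
    """Distance for one box side: sum of bin index times bin probability."""
    probs = softmax(logits)
    return sum(i * p for i, p in enumerate(probs))


# 16 bins (reg_max = 16) cover distances from 0 to 15, in units of the stride
uniform = [0.0] * 16
print(expected_distance(uniform))  # 7.5

peaked = [0.0] * 16
peaked[3] = 50.0  # almost all probability mass on bin 3
print(round(expected_distance(peaked), 3))  # 3.0
```

Because the result is measured in feature-map cells, it is multiplied by the stride to get pixels, which is why reg_max has to grow if objects can be very large relative to the stride.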

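# encoding=utf-8

import torch
import torch.nn as nn

from backbone.basemodules import CBA
from necks.yolov8_pafpn import YOLOV8C2FPAN


class Detect(nn.Module):
    def __init__(self, nc=1, ch=()):
        super(Detect, self).__init__()
        self.nc = nc  # number of classes
        self.nl = len(ch)  # number of detection layers
        # ch[0] // 16. The l, r, t, b distances divided by the stride must fall within [0, 16).
        # For large images with large objects, this value has to be larger as well.
        self.reg_max = 16
        self.no = nc + self.reg_max * 4  # outputs per location
        self.stride = torch.zeros(self.nl)
        c2, c3 = max((16, ch[0] // 4, self.reg_max * 4)), max(ch[0], self.nc)
        # Box branch. The last layer is a plain Conv2d (as in Ultralytics) rather than CBA,
        # so the bin logits are not constrained by BatchNorm + SiLU.
        self.cv2 = nn.ModuleList(
            nn.Sequential(CBA(x, c2, 3), CBA(c2, c2, 3), nn.Conv2d(c2, 4 * self.reg_max, 1)) for x in ch)
        # Class branch
        self.cv3 = nn.ModuleList(
            nn.Sequential(CBA(x, c3, 3), CBA(c3, c3, 3), nn.Conv2d(c3, self.nc, 1)) for x in ch)

    def forward(self, x):
        for i in range(self.nl):
            x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
        if self.training:
            return x
        # The inference path is not implemented yet, so this returns None in eval mode.


# Quick shape check: neck followed by head
c3 = torch.randn(1, 256, 64, 80)
c4 = torch.randn(1, 512, 32, 40)
c5 = torch.randn(1, 1024, 16, 20)
m = YOLOV8C2FPAN()
m1 = Detect(ch=[256, 512, 1024])
output = m([c3, c4, c5])
output = m1(output)
print(output[0].shape)

1.4 yolov8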

Combining the three parts above gives us our forward network.

import torch
import torch.nn as nn

# YOLOv8CSPDarkNet, YOLOV8C2FPAN and Detect are the classes defined in the sections above.


class Yolov8(nn.Module):
    def __init__(self, backbone, neck, head):
        super(Yolov8, self).__init__()
        self.backbone = backbone
        self.neck = neck
        self.head = head

    def forward(self, x):
        x = self.backbone(x)
        x = self.neck(x)
        return self.head(x)


backbone = YOLOv8CSPDarkNet()
neck = YOLOV8C2FPAN()
head = Detect(nc=1, ch=[256, 512, 1024])
model = Yolov8(backbone, neck, head)

x = torch.randn(1, 3, 512, 640)
output = model(x)
print(output[0].shape)
